AWS Solutions Architect Associate - Everything You Need To Know

Passing the AWS Solutions Architect Associate exam is no mean feat and this blog post is a testament to that. Below you will find all of the information required to get you over the line in the exam. Don’t forget it is important to use more than one resource when studying for an exam as in depth as the SAA. For a video course I cannot recommend Adrian Cantrill’s Solutions Architect Course enough - it is probably the best value for money cloud course on the internet. In fact much of the post below is composed from my notes for that exact course.

KEY

BLUE - Important Words and Concepts

Purple - AWS Specific Services, Tools and Concepts

RED - Code Snippets (JSON, YAML etc.)

Cloud Basics

There are some cloud basics to keep in mind when designing cloud solutions. A good solution should adhere to the five principles below:

- On-Demand Self-Service - Provision and Terminate using a UI/CLI without human interaction

- Broad Network Access - Access services over any networks on any devices, using standard protocols and methods

- Resource Pooling - Cloud providers pool resources that can be used by many different tenants. Take advantage of this.

- Rapid Elasticity - Scale UP and DOWN automatically in response to system load.

- Measured Service - Usage is measured and you pay for what you consume.

Infrastructure solutions can only be cloud if they offer the 5 above principles.

These may seem like quite obvious cloud benefits, the point is that Cloud Solutions Architect is an architecting exam and so answers are geared towards solutions that best take advantage of benefits of the cloud.

Public vs Private vs Multi vs Hybrid

- Public Cloud - Using one public cloud (AWS, GCP, Azure)

- Private Cloud - Using on premises real cloud. Meaning using an on prem solution that offers the 5 above principles.

- Multi-Cloud - Using more than one public cloud

- Hybrid Cloud - Using public and private clouds in conjunction.

- Hybrid cloud is NOT public cloud + legacy on premises. The on prem solution has to be considered 'cloud' as well. (Offers at least the 5 above principles)

High Availability

- HA aims to ensure an agreed level of operational performance, usually uptime for a higher than normal period.

- HA doesn't aim to prevent failures entirely, it is a system designed to be up as much as possible. Often using automation to bring systems back into service as quickly as possible.

- It is about maximising a systems online time.

- Good High Availability is all about redundancy. If a server fails you can hot swap in a standby server. This way the downtime is only the time in which it takes to switch. Without this you would have to diagnose and fix the failure before the system could be available again.

Fault Tolerance

- Similar to HA but is different.

- Fault Tolerance is the property that enables a system to continue operating properly in the event of the failure of some of its components.

- If the system has faults then it should continue operating properly. FT means a system should carry on operating through a failure without bringing down the system.

- HA is just about maximising uptime, Fault Tolerance is about operating through failure.

Difference between Fault Tolerance and High Availability:

- You're in a Jeep driving through the desert, you get a flat tire. No worries you have a spare (redundancy) you stop the jeep and swap in your spare tire and then carry on driving. This is high availability, you didn't have to fix the existing tire as you had a spare to swap in which massively reduced the amount of time the Jeep wasn't running.

- Now you're in a plane and an engine fails. You can't just stop the plane or you fall out of the sky and everyone dies. Instead the other 3 engines are designed to pick up the slack, This is fault tolerance the plane (the system) is designed so that if one crucial element fails there are already redundancies running to prevent any downtime whatsoever.

- In High Availability you may have a main server and a back up server, in the event of a failure you switch to the back up server. There is minimal downtime in the switch but there is some and it can cause disruption to users eg. having to re log in.

- In Fault Tolerance both servers are connected to the system at all times and the system can run on either one or both simultaneously without negative effects. This way if a server fails there is no downtime during switch and no user disruption as the remaining server was always connected and part of the session.

- Fault Tolerance is a lot more expensive than High Availability and it is harder to design and implement.

Disaster Recovery

- A set of policies, tools and procedures to enable the recovery or continuation of vital technology infrastructure and systems following a natural or human-induced disaster.

- Pre-plan the processes and documentation for what to do in the event of a disaster.

- DR is designed to keep the crucial and non replaceable parts of your system safe in the event of a disaster.

AWS Accounts

Different to the users using the account. Big projects and businesses typically use many AWS accounts.

At a high level an AWS account is a container for identities and resources

Every AWS account has a unique Account Root User with a unique email address.

The ARU has full control over that one specific AWS account and the resources inside it. The ARU cannot be restricted.

This is why you need to be very careful with the Account Root User.

Even though the ARU can't be restricted, you can create other identities inside the account that can be restricted.

These are created with Identity and Access Management (IAM).

Identities start out with no or limited access to the account and can be granted permissions as needed.

AWS accounts can contain the impact of admin errors, or exploits by bad actors. Use separate accounts for separate things.

Eg. a Development Account, Test Account and Prod Account. This way you can limit problems to specific accounts rather than letting them affect your entire business.

Multi Factor Authentication

Factors - different pieces of evidence which prove identity.

4 common factors:

- Knowledge - something you know, usernames and passwords

- Possession - Something you have eg. bank card, MFA Device

- Inherent - Something you are, fingerprint, face, voice.

- Location - A physical location, which network (corporate or Wifi)

More factors means more security and harder to fake.

A username and password are both things you know. So by themselves they are single factor as they only use the knowledge factor.

Billing Alerts

My billing dashboard - central location for managing costs.

Cost Explorer - extensive break down of costs

Good Initial Account Setup

- Add Multi Factor Auth for the root user (My security credentials)

- Tick all billing preferences boxes (Billing Dashboard)

- Add a Billing Alert for usage (cloudwatch - Make sure in N. Virginia region)

- Enable IAM User & Role Access to billing (my account)

- Add account contacts (myaccount -> alternate contacts)

- Create IAM identities for regular account use

IAM - Identity & Access Management

Best practice is to only use root user for initial account set up. Afterwards utilise IAM identities. This is because there is no way to restrict permissions on root user.

IAM Basics:

There are 3 types of IAM identity objects: Users, Groups and Roles

- Users - Identities which represent humans or applications that need access to your account.

- Groups - Just collections of related users. eg. Development Team, Finance, HR

- Role - Can be used by AWS Services or for granting external access to your account. Generally used when the number of things you want to grant access is uncertain. Eg. you want to grant all EC2 instances access but you wouldn't know exactly how many there will be.

IAM Policy: Allow or deny access to AWS services when attached to IAM Users, Groups or Roles. On their own they do nothing, they simply allow or deny access to services. Only when you attach them to an IAM Identity do they provide a function.

IAM has three main jobs:

- Identity provider (IDP) create identities such as Users and Roles

- Allows you to authenticate those identities. Generally with a username and password.

- Authorises access or denies access to AWS Services and tools

More on IAM:

- Provided at No Cost (with some exceptions)

- Global Service with Global Resilience it has one global database for your account.

- Only controls what its identities can do. Allows or Denies based on IAM policies.

- Allows you to use identity federation and MFA

IAM Access Keys:

Keys allow you to access AWS Services via means other than the AWS Management Console eg. AWS CLI

- An IAM User has 1 Username and 1 Password

- An IAM User can have two access keys - This is to allow users to rotate in new access keys and rotate out old ones

- Access keys can be created, deleted, made inactive or made active.

- Made up of an Access Key ID and a Secret Key - AWS will only show you the Secret Key once when created and never again after.

Setting up keys on AWS:

- Go to top right of AWS Management Console where log in drop down is -> my security credentials

- Click Create access key

- Note down both Access Key ID and Secret Key, AWS will only show you this Secret Key once. You can also download the keys in a CSV.

Install AWS CLI:

- Test installation by typing 'aws --version' in your standard command line.

- If installation successful it should print the version you are using.

- If not you may need to restart the machine.

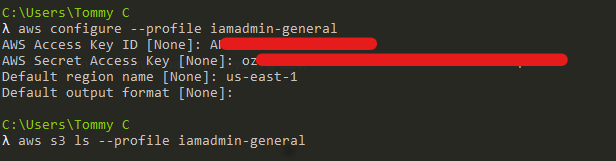

Configure your AWS Keys on AWS CLI:

- aws configure --profile iam-username

- Enter AWS Access Key ID

- Enter Secret Access Key

- Enter Default Region eg. us-east-1

- Enter Default Output format. Can leave as None (just press enter) and will default to JSON

- Test set up with a command eg. aws s3 ls --profile iam-username # If you don't specify the --profile then it won't know where to look and won't work.

- Output will be empty string if you have no s3 buckets set up on account, or a list of s3 buckets if there are some set up. If setup was incorrect there will be an error message.

YAML - 'YAML Ain't Markup Language' BASICS

One of the languages used by CloudFormation (Infrastructure as Code)

A Language which is human readable and designed for data serialization. For defining data or configuration.

At a high level a YAML document is an unordered collection of Key:Value pairs.

Key:Value explained:

Say you have 3 dogs you can put their names in a key value pair:

dog1:Rover

dog2:Rex

dog3:Jeff

Note that these Keys and Values are all strings but YAML supports other types such as: Numbers, Floating Point (decimals), Boolean (true or false) and Null.

YAML also supports Lists:

mydogs: ["Rover", "Rex", "Jeff"] # This is inline formatting

These are comma separated elements enclosed within [square brackets]

You can format lists in another way (not inline):

mydogs:

- "Rover"

- 'Rex'

- Jeff

Notice how you can use double quotes, apostrophes or neither. All are valid syntax, enclosing in quotes or apostrophes can be more precise with typing (strings, numbers etc.)

Indentation matters in YAML in the mydogs list immediately above (not inline) Rover, Rex and Jeff are all indented the same amount so YAML knows these are all part of the same list.

This means you can nest lists in lists by using the same indentation.

YAML Dictionary:

mydogs:

- name: Rover

colour: [black, white]

- name: Rex

colour: "Mixed"

- name: Jeff

colour: "brown"

numoflegs: 3

In this case, mydogs is a list of dictionaries.

Notice how there are 7 lines but only 3 hyphens, well each hyphen denotes the start of a dictionary which is an unordered set of Key:Value pairs.

You can tell all 3 items in the list are part of the mydogs Key as there is only one level of indentation.

You can also have lists within dictionaries, notice in the Key:Value pair for Rover's Colour there is an inline list [black, white]

Using YAML Key:Value Pairs, Lists and Dictionaries allows you to build complex data structures in a way which is human readable. In this case just a simple database of a persons dogs.

YAML files can be read into an application or written out by an application and is commonly used for storage and passing of configuration (infrastructure as code).

JavaScript Object Notation (JSON) BASICS

JSON is an alternative format which is used in AWS. Where YAML is generally only used for CloudFormation, JSON is used for both CloudFormation and other things like IAM Policy Documents.

JSON is a lightweight data interchange format. It's easy for humans to read and write. It's easy for machines to parse and generate.

JSON doesn't really care about indentation as everything is enclosed in something. Due to this it can be a lot more forgiving than YAML around spacing and positioning.

A Few definitions to be aware of to get to grips with JSON:

An object is an unordered set of key:value pairs enclosed by {curly braces}

{"dog1": "Rover", "Colour": "White"} #This is the same as a dictionary in YAML

An array is an ordered collections of values separated by commas and enclosed in [square brackets]

["Rover", "Rex", "Jeff"] # This is the same as a list in YAML

Values in JSON can be Strings, Objects, Numbers, Arrays, Boolean or Null

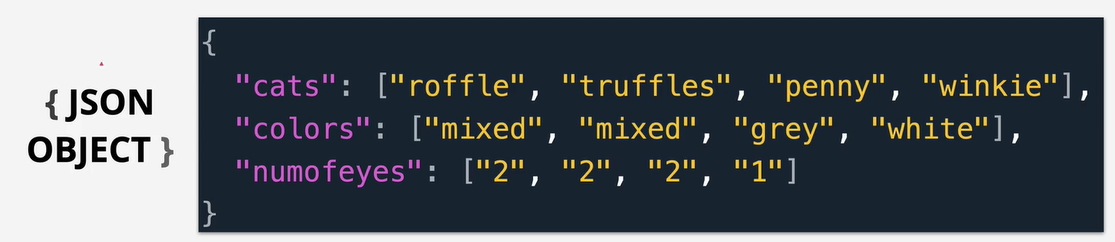

Example of a simple JSON document:

Notice how the entire document is enclosed in {curly braces} this is because at a top level a JSON document is simply a JSON object.

In the above example there are 3 keys: cats, colors and numofeyes. Each value for these keys is an array (list).

JSON doesn't require indentation as it uses speech marks, curly braces and square brackets. However indentation will make it easier to read.

Networking Basics

OSI 7-Layer Model

The OSI Model is conceptual and not always how networking is implemented but it is a good foundation.

The term networking stack refers to this, the software the performs each of the below functions:

There are 2 groups in the OSI model:

- Physical, Data Link and Network comprise the Media Layers - Deals with how data is moved between Point A and Point B, whether locally or across the planet.

- Transport, Session, Presentation and Application comprise the Host Layers - How the data is chopped up and reassembled for transport and how it is formatted to be understandable by both ends of the network.

A layer one device just understands layer 1.

A layer three device has layer 1, 2 & 3 capability.

And so on...

Layer 1: Physical

Physical medium can be copper (electrical), fibre (light) or WIFI (RF).

Whatever medium is used it needs a way to carry unstructured information.

Layer 1 (Physical) specifications define the transmission and reception of RAW BIT STREAMS between a device and a shared physical medium (copper, fibre, WIFI). Defines things like voltage levels, timing, rates, distances, modulation and connectors.

For instance transmission of data between two hypothetical laptops can happen because both laptops network cards agree on the layer one specifications, this enables 0s and 1s to be transferred across the shared physical medium.

At layer one there is no individual device addresses. One laptop cannot specifically address traffic at another, it is a broadcast medium. Everything else on the network receives all data sent by one device, this is solved by layer 2.

If multiple devices transmit at once - a collision can occur. Layer 1 has no media access control and no collision detection.

Layer 2: Data Link

One of the most critical layers in the entire OSI model.

A layer 2 network can run on any type of layer 1 network (copper, fibre, WIFI etc.).

There are different layer 2 protocols and standards but one of the most common is Ethernet.

Layer 2 introduces MAC addresses, these are 48 bit addresses that are uniquely assigned to a specific piece of hardware. The MAC address on a network card should be globally unique.

Layer 2 introduces the concept of frames:

Layer 2 fundamentally encapsulates data in frames. Encapsulation is important to know as each layer of the OSI model performs some encapsulation of data as the data is passed down the layers.

A frame contains a preamble of 56 bits (prior to the mac addresses) this is to indicate the start of a frame.

Then you have the MAC header which is the destination MAC address, the source MAC address and finally the Ether Type which is the Layer 3 protocol being used to send the data (eg. TCP/IP)

Following on from the MAC header is the Payload, this is the actual data the frame will carry from source to destination. This data is typically provided by the Layer 3 protocol (as defined in the MAC header).

The layer 3 data is put into a layer 2 frame, this frame is sent across the physical medium by layer 1 to a different layer 2 destination. The payload data is then extracted and given back to layer 3 at the destination. It knows which Layer 3 protocol to hand the data off to at destination because it is defined in the frame by the Ether Type.

Finally at the end of the frame there is the CRC Checksum which is used to determine any errors in the frame. It allows the destination to check if there has been any corruption.

It is critical to understand that Layer 2 requires a Layer 1 network in order to function. Layer 2 sits on top of Layer 1. Layer 2 can prevent collisions and data corruption by ensuring that there is not currently data being transmitted by another device before it transmits its own data. In this way Layer 2 adds access control to the layer 1 physical medium.

Layer 2 gives us:

- Identifiable devices - frames can be uniquely addressed to other devices

- Media Access Control - Layer 2 controls access to Layer 1

- Collision prevention and detection

Layer 3: Network

Layer 3 gets data from one location to another.

Layer 3 is a solution that can move data between layer 2 networks, even if those layer 2 networks use different layer 2 protocols.

Internet Protocol (IP) is a layer 3 protocol which adds cross-network IP addressing and routing to move data between Local Area Networks without direct P2P links. So you can move data between two local networks across a continent without a direct link between the two. IP packets are moved step by step from source to destination via intermediate networks using routers. Routers are Layer 3 devices.

Packet Structure:

Where as with layer 2 frames the source and destination addresses are generally part of the same local network, the source and destination at layer 3 could be on different sides of the planet.

layer 3 Packets are placed in the payload part of a layer 2 frame.

Every packet has a Source IP Address generally the device address that generated the request. They also have a Destination IP Address where the packet is intended to go.

Additionally as in the diagram each packet has a protocol attached to it. These are Layer 4 protocols for instance TCP or UDP. This field means at the destination the device knows which layer 4 protocol to pass the data in the packet to.

Time-to-live defines the maximum number of hops the packet can take before being discarded, this ensures that if it can't be routed correctly to its destination it doesn't just bounce around forever.

Data contains the data being carried in the packet.

IP Addressing:

This will cover IPv4 but not IPv6 which is covered later on.

Example of an IP address: 133.33.3.7

This is known as Dotted-Decimal-Notation four numbers from 0-255 separated by dots.

All IP addresses are formed of two different parts:

- The first two numbers are the 'network part' - These numbers represent the network itself.

- The second two numbers are the 'host part' - These two numbers are used by devices on the network.

So 133.33 will represent someone's network

3.7 will then represent a device on that network (the devices address being 133.33.3.7)

If the network part of two IP addresses match, it means they're on the same IP network. If not they are on different networks.

An IP address is made up of four 8 bit numbers for 32 bits in total. Each 8 bit number then is sometimes referred to as an octet

IP addresses are either statically assigned by humans or they can be dynamically assigned by a protocol. On a network IP addresses need to be unique.

Subnet Masks:

These allow a device to determine if an IP address is local or remote. Is it on the same network or not.

They essentially specify to a device which part of an IP address represents the network and which part represents hosts on that network.

An in depth definition can be found here.

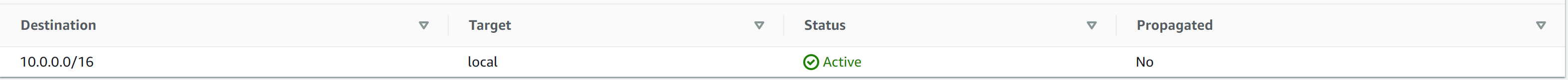

Route Tables & Routes:

Every router has an IP Route Table. When a packet is sent, the router reads the destination IP address and checks it against its table to see if there is a matching entry for where it can then send the packet on to.

The more specific the match the better, for instance if you want to find 64.216.12.33 then the router may have both 64.216.12.0/24 and 0.0.0.0/0 in its table, these would both match.

This is because the /24 essentially says the first 24 bits have been filled and this IP address represents a network comprising all 255 addresses possible: 64.216.12.(0-255).

So this matches because 64.216.12.33 is one of those 255 addresses. Specifically it is a device / server on the 64.216.12.0/24 network.

0.0.0.0/0 also matches 64.216.12.33 because 0.0.0.0/0 literally matches all IP addresses. In the same way /24 represents 255 addresses (24 bits filled in) /0 represents all IP addresses (no bits filled in). So from the Routers point of view 0.0.0.0/0 is a network of all IP addresses and so the IP address you are looking for would be part of this. However as there is a more specific option in 64.216.12.0/24 the router will choose to send the packet to the destination corresponding to 64.216.12.0/24 in its IP route table.

0.0.0.0/0 is often used as a default destination in a route table. If no other match can be found then this address will always match so the router sends the packet to whatever destination is specified by 0.0.0.0/0 presumably a more in depth IP Route Table.

Address Resolution Protocol (ARP):

Provides the MAC Address for a given IP address.

In order to put the Layer 3 data into a Layer 2 frame you're required to specify the Destination MAC Address in the frame.

So ARP runs between Layer 3 and Layer 2 in order to get the Layer 2 Mac Address from the Layer 3 IP Address.

Layer 4: Transport

Layer 4 Transport runs over the top of the network layer and provides most of the functionality to support the networking we use from day to day.

Layer 4 solves issues that can arise with just Layer 3 protocols:

- The Layer 3 IP protocol sends packets from one location to another but doesn't have a way to ensure they arrive in order or if they do arrive out of order how to piece the packets together into coherent data. Packets can arrive out of order because Layer 3 routing is 'per packet' so each packet can take a different route dependent on network conditions at the time.

- Additionally with only Layer 3 there is no way to know which packets have arrived at the destination and which haven't.

- IP also offers no way to separate the packets for individual applications, this means at a Layer 3 level you could only run one application at a time on the network.

- It also offers no flow control. If the source transmits faster than the destination can receive it can saturate the destination causing packet loss

Layer 4 brings two new protocols:

- TCP - Transmission Control Protocol - Slower / Reliable

- UDP - User Datagram Protocol - Faster / Less Reliable

Both of these run on top of IP and add different features dependent on which one is used. TCP/IP is commonly used and this just means TCP at Layer 4 running on top of IP at Layer 3.

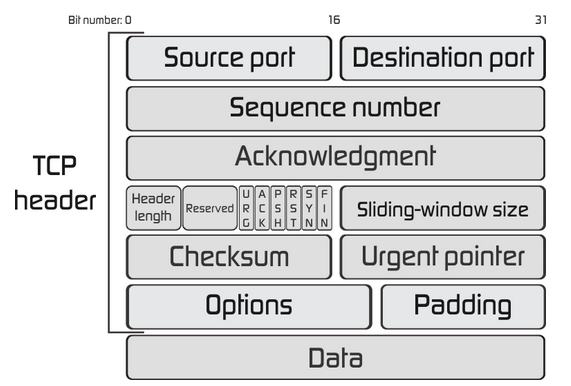

TCP In Depth:

TCP introduces Segments which are just another container like packets and frames. Segments are contained in (encapsulated within) IP packets. The packets carry the segments from source to destination. Segments don't have source and destination IP addresses because they use the packets to do this instead.

Structure of a segment:

TCP segments add Source and Destination Ports. This gives the combined TCP/IP protocol the ability to have multiple data streams running at the same time between two devices.

The Source & Destination Ports and IP addresses together identify a single conversation (data stream) happening between two devices.

The Sequence Number is a way of uniquely identifying a particular segment in a data stream and is used to order data. So even if the packets turn up out of order they can be reordered correctly by the segment sequence number.

The Acknowledgement is the way that one side can indicate it has received up to and include a certain sequence number. Essentially how one side of the conversation can communicate which data packets might need resending in case they have been lost. This is what makes TCP more reliable than UDP it includes acknowledgements so the sender of data knows which packets have and haven't arrived. Check out this video which explains this concept and TCP/IP really well.

The Sliding Window Size solves the flow control problem. Defines the number of bytes indicated by the receiver that they are willing to receive between acknowledgements. This means that if this limit is reached the sender will stop sending data until they receive another acknowledgment, in this way the receiver is not overloaded (saturated) and data isn't lost.

TCP is a connection based protocol. A connection is established between two devices using a random port on a client and a known port on the server (eg. HTTPS 443). Once established the connection is bi-directional and it is considered reliable.

Important TCP Ports:

- tcp/80 - HTTP

- tcp/443 - HTTPS

- tcp/22 - SSH

- tcp/25 - SMTP (email)

- tcp/21 - Telnet

- tcp/3389 - Remote Desktop Protocol

- tcp/3306 - MySQL/MariaDB/Aurora

Network Address Translation (NAT):

NAT is designed to overcome IPv4 shortages.

It translates Private IPv4 addresses to Public addresses.

- Static NAT - 1 private device IP is assigned to a specific public address, useful when a particular private IP needs to have a consistent public IP. This is used by the Internet Gateway (IGW) in AWS.

- Dynamic NAT - 1 private device IP picks the 1st available public address from a pool. This public IP can be different the next time around. Multiple private devices can then share 1 public IP.

- Port Address Translation - Many private addresses to 1 public address. The method the NAT Gateway uses in AWS.

NAT also offers more security because it obscures the IP addresses of resources on a private network. This way outside actors can only know the IP address of the NAT device but not the addresses of the resources it routes to in the private network.

See this video for a good overall explanation of NAT.

IP Addressing

- IPv4 is still the most popular network layer protocol on the internet.

- IPv4 goes from 0.0.0.0 -> 255.255.255.255 = 4,294,967,296 addresses. ( not enough considering 8 billion population and lots of people have more than one device)

- All public IPv4 addressing is allocated. You have to be allocated these addresses.

- Part of the address space in IPv4 is private and can be used and reused freely within private networks.

There are 3 ranges of IPv4 addresses that can be used in private networks and can't be used as public addresses.

- The first private range is Class A: 10.0.0.0 - 10.255.255.255 this is just 1 network and it provides a total of 16.7 million addresses. This is generally used in cloud environment private networks and is usually chopped up into smaller subnets.

- The second private range is Class B: 172.16.0.0 - 172.31.255.255 this is a collection of 16 networks (16-31) each network contains 65,536 addresses.

- The third private range is Class C 192.168.0.0 - 192.168.255.255 this is a collection of 256 networks each containing 256 addresses. This is generally used in home and small office networks.

You can use these ranges however you like, but you should always aim to allocate non overlapping ranges to all of your networks.

IPv4 vs IPv6:

IPv4 has 4.2 billion addresses whereas IPv6 has 340 sextillion addresses. IPv6 has 4 quadrillion addresses per person alive today.

IP Subnetting

Classless inter domain routing (CIDR) lets us take a network of IP addresses and break them down into smaller subnets.

CIDR specifies the size of a subnet via slash notation:

- Slash notation uses prefixes tail -50f /var/log/legerity/fastpost/lol-uat/20220117165138_recoverdb.logeg. /8, /16, /24 and /32

- Lets say you are using a Class A private network. Without subnetting this runs from 10.0.0.0 - 10.255.255.255 or 16.7 million addresses.

- CIDR allows us to specify a small selection of this like 10.16.0.0/16 in this example the first 2 octets are taken leaving the remaining two (0.0) for the subnet (65,536 addresses).

- Essentially we have allocated the address space from 10.16.0.0 - 10.16.255.255 to our subnet via CIDR

- If we were to specify 10.0.0.0/8 this is the same as the entire Class A network, the first octet of the address is taken (10) leaving 0.0.0 - 255.255.255 (16.7 million addresses).

- The larger the prefix value (/16, /24 etc.) the smaller the network.

- You could actually have a /17 network this is basically half a /16 network. So 10.16.128.0/17 is half of 10.16.0.0/16 as the first 128 of the third octet in the /17 are already occupied so it only runs from 128.0 - 255.255.

- Similarly you could have /18 network this is half of a /17 network so 10.16.192.0/18. 192 is halfway between 128 and 255 so it is a /17 network cut in half. Four /18 networks are the size of one /16 network.

- /0 is the entire internet 0.0.0.0

Distributed Denial of Service (DDoS)

- Attacks designed to overload websites

- Uses large amounts of fake traffic to compete against legitimate connections

- Distributed - hard to block individual IPs/Ranges in an attempt to stop it

SSL and TLS

SSL - Secure Sockets Layer

TLS - Transport Layer Security

TLS is just a newer and more secure version of SSL.

They both provide privacy and data integrity between client and server.

TLS benefits:

- Ensures privacy by making communications between the client and server encrypted.

- Identity verification allows the client to verify the server and vice versa.

- Provides a reliable connection - protects against data alteration in transit.

Domain Name System (DNS) Basics

- DNS is a discovery service

- Translates machine into human and vice versa.

- For example humans use www.amazon.com to access Amazon but this needs to be translated into an IP address. DNS provides this service.

- DNS is a huge distributed and resilient database

- There are over 4billion IPv4 addresses and even more IPv6 and DNS has to be able to handle that scale.

DNS definitions:

- DNS Client - your laptop, phone, tablet, PC. The device that want's the information on a DNS server.

- Resolver - Software on your device, router or server which queries DNS on your behalf.

- DNS Zone - Part of the DNS database, every website URL has it's own Zone.

- Zonefile - The physical database for a zone.

- Nameserver - where zonefiles are located.

Your DNS Client asks the resolver to find the information for a given URL. The resolver queries DNS to find the correct Zone and Nameserver and then pulls the requisite information from the Nameserver eg. the IP address for which to send data.

AWS Fundamentals

AWS Public vs Private Services

All AWS services can be categorised into one of these two types.

When discussing public and private they relate to networking only. Whether a service is private or public is down to connectivity.

An AWS public service is one that can be connected to from anywhere with an unrestricted internet connection. For instance when you are accessing data stored in S3 you are connecting to it via the internet so S3 is a public service.

S3 sits in the public zone along with other AWS public services.

There is also a private zone in AWS. This by default does not allow any connections between the private zone and anywhere else. The private zone can be subdivided using Virtual Private Clouds or VPCs.

AWS Global Infrastructure

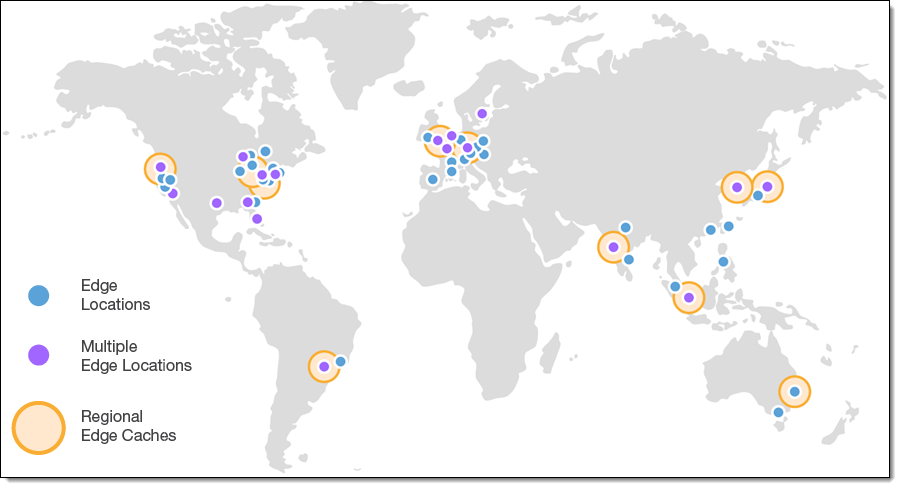

AWS Regions - Areas of the world with a full deployment of AWS infrastructure (compute, storage, DB, AI etc.). AWS is constantly adding regions, some countries have one region others have multiple regions. Regions are geographically spread so you can use them to design systems that are resilient to disasters.

AWS Edge Locations - Much smaller than regions but much more plentiful. Allows services to store data and resources as close to customers as possible. They don't offer the same breadth of services as Regions. Think more like Netflix needing to store its TV and Film data close to as many customers as possible to reduce latency.

Some services are region specific like EC2 so each needs its own individual deployment in each region. Others like IAM are global.

Regions have 3 main benefits:

- Geographic Separation - Isolated Fault Domain

- Geopolitical Separation - Different Governance - your data and infrastructure is governed by the rules of the government of that region.

- Location Control - Place infrastructure as close to customers as possible. Easily expand into new markets.

Regions can be referred to by their Region Code or Region Name.

For instance the region in Sydney is:

Region Code: ap-southeast-2

Region Name: Asia Pacific (Sydney)

If you had infrastructure in the Sydney region and a mirror of it in the London region then if Sydney failed it wouldn't affect your London infrastructure as it is isolated.

Availability Zones:

You will also want resiliency within regions, and AWS provides Availability Zones (AZ)for this. AZs are isolated "datacentres" within a region, as a Solutions Architect you can distribute your systems across AZs to build resilience and provide high availability.

You can place a private network across multiple Availability Zones with a Virtual Private Cloud (VPC).

Resilience Levels:

Globally Resilient - A service distributed across multiple regions, it would take the world to fail for the service to experience an outage. Examples of this are IAM and Route 53

Region Resilient - Services that operate in a single region with one set of data per region. They operate as separate services in each region and generally replicate data across multiple AZs within the region. If the region fails the service will fail.

AZ Resilient - Services run from a single AZ, if that AZ fails then the service will fail.

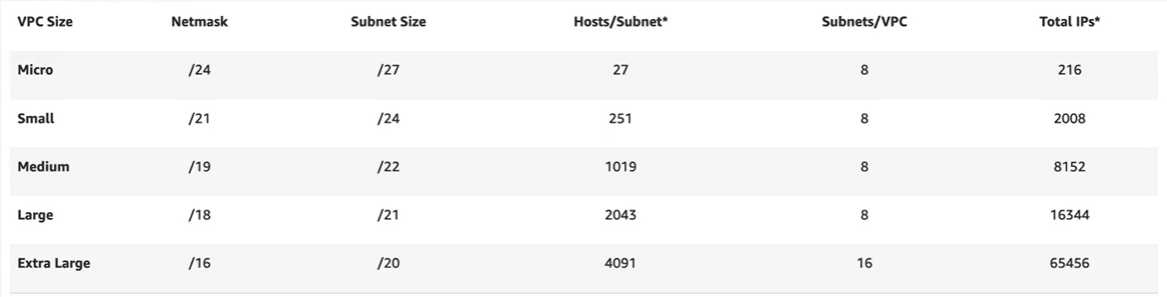

Virtual Private Cloud (VPC)

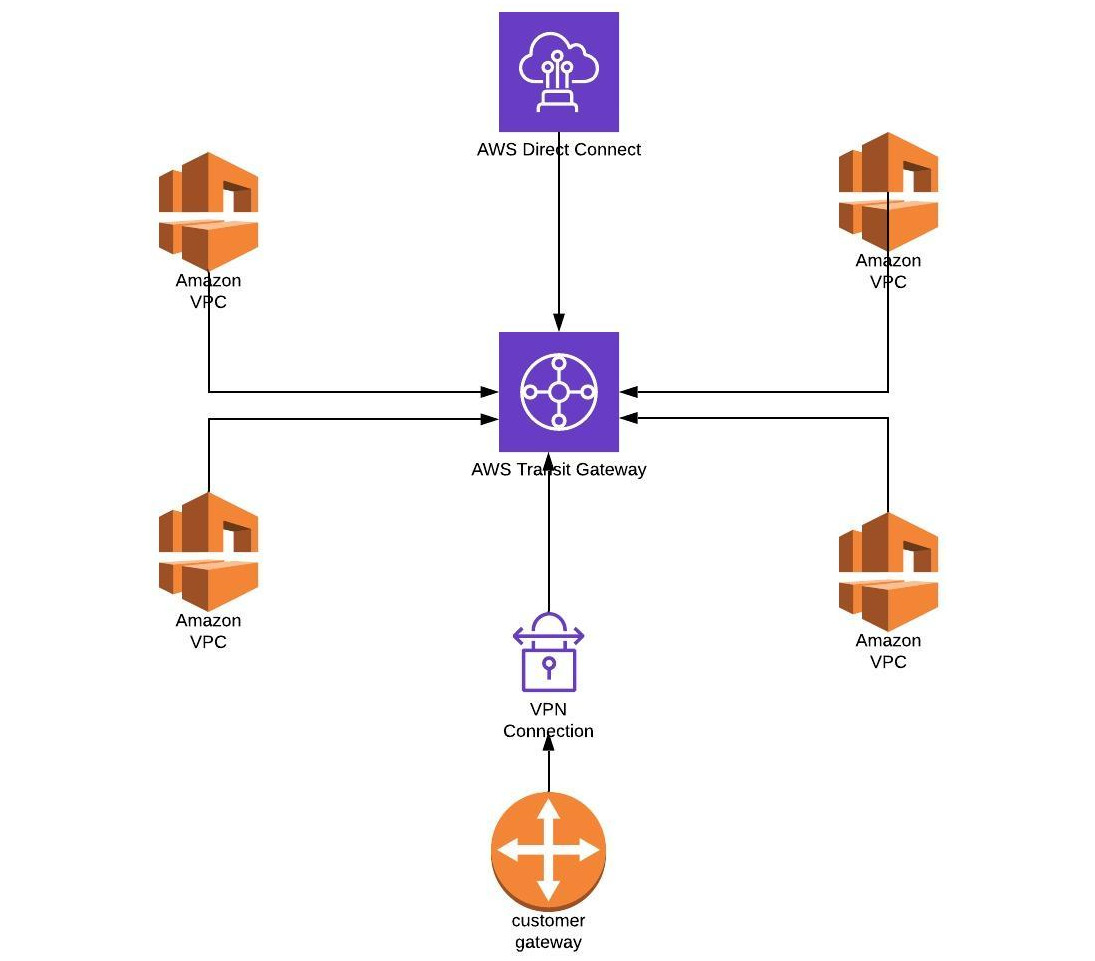

VPC is the service used to create private networks inside AWS that other private services will run from. It is also the service that connects your private networks with on-prem networks when you are building a hybrid solution. Additionally it can connect AWS with other cloud providers when using a multi cloud solution.

A VPC is a virtual network inside AWS.

A VPC is within 1 account and 1 region. They are regionally resilient as they operate across multiple AZs within one region.

By default a VPC is private and isolated unless you decide otherwise. Resources can communicate with other resources within the VPC itself.

There are two types of VPC in a region - The Default VPC and Custom VPCs. You can only have ONE Default VPC per region. You can have many Custom VPCs in a region.

As the name suggest Custom VPCs can be configured anyway you want and are 100% private by default. Unless configured otherwise there is no way for Custom VPCs to communicate outside of their own private network.

Default VPCs are initially created by AWS in a region and are very specifically configured making them more restrictive.

VPC Regional Resilience:

Within a region every VPC is allocated a range of IP Addresses, the VPC CIDR.

Everything inside that VPC uses the CIDR range of that VPC.

Custom VPCs can have multiple CIDR ranges, the Default VPC always has one range and it is always the same: 172.31.0.0/16

The way in which a VPC can provide resilience is that it is subdivided into subnets and each subnet is placed in a different Availability Zone.

The Default VPC is always configured in the same way - it has one subnet in every availability zone in its region. So if a region has 3 AZs the Default VPC will have 3 subnets, one in each with the CIDR block 172.31.0.0/16 split evenly across those 3 subnets.

VPCs IN AWS

In your AWS account you can view the Default VPC by typing VPC in the search bar. Then select "Your VPCs" you will then see one or more VPCs and one of them will be set as the Default VPC.

You can view the subnets set up for this VPC (one in each AZ) in the Subnets section under Your VPCs.

All regions on your account will have a Default VPC - when you have Your VPCs or Subnets open switch to a region you have never used before and you will still see a preconfigured Default VPC.

In the same section you will see along with the Default VPC a Network ACL and Security group are also pre-created. The can be see under the security tab in the sidebar. Additionally an Internet Gateway is pre-created.

You can delete a Default VPC:-- Your VPCs -> Select the Default VPC -> Then Delete it.

You can recreate a Default VPC if it has been deleted. Go to actions and Create Default VPC. You do not use the 'Create VPC' button for this as that is only for Custom VPCs.

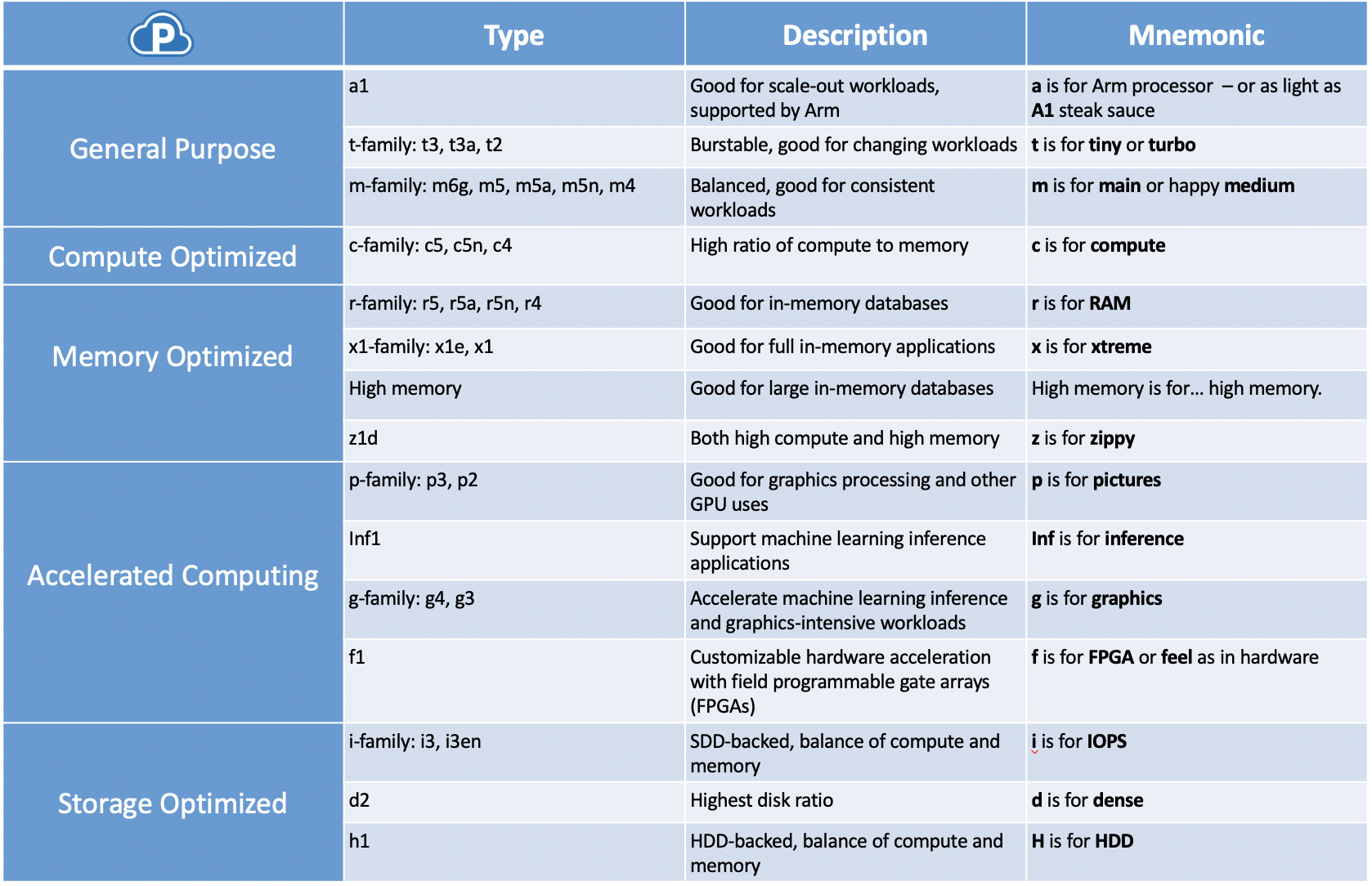

Elastic Compute Cloud (EC2)

The default starting point for any compute requirement in AWS.

- EC2 is IAAS - Provides Virtual Machines known as instances

- Private service - Configured to launch into a single VPC subnet you have to allow public access (internet) as by default it is private.

- EC2 is only AZ resilient if the AZ fails the EC2 instance itself fails. This is because it is launched in one subnet and a subnet exists in one AZ.

- Different sizes and capabilities are available to choose from when configuring EC2 instances.

- Offers on demand billing - you only pay for what you consume.

- Two main types of storage - Local on Host Storage or Elastic Block Store (EBS)

EC2 instances have an attribute called a state. They can be in one of a few states, most commonly:

- Running

- Stopped

- Terminated

When an instance launches, after it provisions it moves into a 'running' state. It moves from 'running' to 'stopped' when you shutdown the instance. Or vice versa when you start the instance back up.

An instance can also be terminated, it is one way - a non reversible action, it is fully deleted.

At a high level EC2 instances are composed of a CPU, Memory, Disk and Networking.

- When an EC2 instance is running you are charged for all 4 of these aspects. It consumes CPU even while idle, it uses memory even when no processing occurs, it's disk is allocated even if unused and additionally networking will be in use.

- When an instance is stopped, No CPU resources are consumed, no memory is used either. So you are not charged for these as it is not running. Also there are no network charges. However storage is still allocated regardless of it's running or stopped. So you will still be charged for EBS Disk Storage if an EC2 is stopped.

Amazon Machine Image (AMI):

An AMI is an image of an EC2 instance.

It can be used to create an EC2 Instance or an AMI can be created from an EC2 Instance.

An AMI can have 3 different permissions settings:

- Public - Everyone can see and use it.

- Owner - Only the owner of the AMI.

- Explicit - Specific AWS accounts are allowed to use it.

Simple Storage Service S3

- S3 is a global storage platform - region resilient (replicated across AZs in a region)

- It is a public service (accessed anywhere via an internet connection) allows for storage of unlimited data and can be used by multiple users.

- Stores nearly all data types Movies, Audio, Photos, Text, Large Data Sets etc.

- Economical Storage solution and can be accessed via the UI / CLI / API & HTTP

- Two main elements to S3 - Objects & Buckets. Objects are the data S3 stores (pictures, videos etc), Buckets are containers for objects.

Objects:

- You can think of objects like files.

- Each object has a key (like a filename) so you can access that particular object in a bucket.

- Each object also has a value - the data comprising the object eg. the data that makes up an image. An object can be anywhere from 0 bytes (empty) to 5TB. This makes S3 very flexible.

Buckets:

- Created in a specific AWS region.

- This means the data inside your bucket has a primary home region.

- S3 Data in a bucket never leaves that region unless you configure the data to leave the region. This means it has stable sovereignty you can keep your data in one specific region ensuring it stays under one set of laws.

- If a major failure occurs (natural disaster or large scale data corruption) S3 impacts will be contained to the region the bucket is in as data doesn't leave it's region without configuration.

- Every bucket has a name and it has to be globally unique (across all AWS accounts and buckets). If any other AWS account anywhere in the world has created a bucket no other bucket can have the same name.

- A bucket can hold an unlimited number of Objects. It is infinitely scalable.

- Buckets have a flat structure - all objects are stored at the same level (no nesting)

- Objects in a bucket can be named using prefixes (/pic/cat1.jpg, /pic/cat2.jpg, /pic/cat3.jpg) the bucket will recognise these cat jpgs as related and present them in a folder called 'pic' (however this isn't a real file structure the bucket structure is still flat).

Important info for CSA Exam:

- Bucket names are globally unique.

- Bucket names have 3-63 Characters, all lowercase, no underscores.

- Bucket names start with a lowercase letter or a number.

- Bucket names can't be IP formatted e.g. 1.1.1.1

- An account is limited to 100 buckets but this can be increased to 1,000 using support requests (1,000 is a hard limit).

- Unlimited number of objects in a bucket with each object ranging from 0 bytes to 5TB.

S3 Patterns and Anti Patterns:

- S3 is an object store - not file or block

- You can't browse S3 like you would a windows file system and you can't mount an S3 bucket like you would a drive.

- S3 is great for large scale data storage, distribution or upload.

- Great for 'offload' - Say you have a blog with lots of images and data you can keep this data in an S3 bucket rather than on an expensive EC2 instance and configure your blog to point users to S3 directly.

- It is the Default INPUT and/or OUTPUT to MANY AWS products. Most of the time the ideal storage solution for an AWS service is S3 (useful for the exam)

CloudFormation Basics

Tool which lets you create, update and delete infrastructure in AWS in a consistent and repeatable way using templates.

A CloudFormation template is written in either YAML or JSON.

CloudFormation Templates:

- All templates have a list of resources. This tells CloudFormation what to do. The resources section is the only mandatory part of a template.

- AWSTemplateFormatVersion this can come at the start of a template and is used to specify the template version. If it is omitted then the version will be assumed.

- Templates can have a description section, this allows the author to note down what the template is for and what it does. This is to help other users of the template. If there is a description section it must follow the AWSTemplateFormatVersion.

- Templates can have a metadata section, this controls how things will be set out in the Console UI.

- The Parameters section allow you to add fields which users must enter information into in order to use the template (eg. Instance Size?)

- Mappings section allows you to create lookup tables.

- The Conditions section allows decision making in the templates. You can set certain things that will only occur if the condition is met.

- The Outputs section is what the template should return once the template is finished being used by CloudFormation.

When you give a template to CloudFormation it creates what is known as a Stack. This Stack is a logical representation of the resources specified in the template. Then for each logical representation of a resource in the stack CloudFormation creates a matching physical resource within your AWS account.

You can adjust a template, when you do the Stack will change and so CloudFormation will adjust the physical infrastructure to match.

You can also delete a stack in which case CloudFormation will adjust physical infrastructure to match (by deleting them).

CloudFormation allows you to automate infrastructure. It can also be used as part of change management.

Cloudwatch Basics

This Collects and manages operational data.

Cloudwatch performs 3 main jobs:

- Metrics - CW monitors + stores metrics and can take actions based on them. These are Metrics from AWS products.

- Cloudwatch Logs - Storing and taking actions based on logs from AWS Products.

- Cloudwatch Events - Takes an action based on a specified event or takes an action based on a set time.

Cloudwatch can also work with non-AWS products, this has to be configure via the CloudWatch Agent. You can use CloudWatch to collect and manage data even from a different cloud platform.

Based on what data Cloudwatch stores:

- It can be viewed via the console

- Can be viewed via the CLI

- Can take an action eg. autoscaling EC2 instances based on usage.

- Can send an alert email via SNS

- + A lot more

CloudWatch Definitions

- Namespace These are a way of separating data with in CloudWatch, they contain related metrics.

- Metrics are a collection of data points in a time ordered structure. Eg. CPU Usage, Network IN/Out, Disk IO.

- Datapoints - for instance every time a server reports its CPU Utilisation that data goes into the CPU Utilisation metric. Each time CPU Utilisation is reported by the server that individual report is called a datapoint. Datapoints feed into metrics.

- Dimensions - These separate datapoints for different things within the same metric. Say you have 3 EC2 instances reporting CPU Utilisation every second and that data is going into the AWS/EC2 Namespace. Each of these EC2 instances will also send 'dimensions' key value pairs like the EC2 instance ID and the type of instance. This allows us to view datapoints for a particular instance.

So using the above you can take as shallow or as deep a look at data as you like. You can go into the AWS/EC2 namespace, choose to look at EC2 CPU Utilisation metric within that Namespace , see individual datapoints. From there you could drill down into the dimensions to see specific data for each EC2 instance.

CloudWatch Alarms

Alarms allow CloudWatch to take actions based on Metrics.

Each alarm is linked to a particular metric. Based on how you configure the alarm CloudWatch will take actions when you deem it should.

For instance a billing alarm with a criteria of 'If monthly estimated bill is > $10' could be configured to send an email to the account owner should that criteria be met. CloudWatch can take a lot more actions than just sending emails.

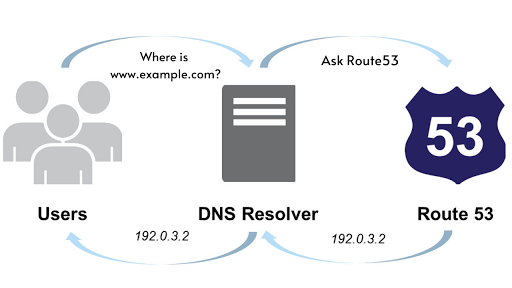

Route 53 Basics

Provides 2 main services:

- Allows you to register domains

- Can host zones on managed nameservers

It is a Global Service with a single database. So you don't need to pick a region when using it. It is globally resilient.

IAM, Accounts and AWS Organisations

IAM Identity Policies

These are a type of policy which get attached to identities in AWS. Identities are IAM Users, Groups and Roles.

Identity Policies also known as Policy Documents are created using JSON. They are a set of security statements governing permissions and access for an identity within AWS.

An identity can have multiple policies attached to it.

Identity Policy Statement Structure:

"Statement": [

{

"Sid": "FullAccess",

"Effect": "Allow",

"Action": ["s3:*"],

"Resource": ["*"]

}

- First is the Statement ID or SID this is an optional field which lets you identify a statement and what it does. This just lets us inform the reader of what this statement actually does.

- Effect - this is either "Allow" or "Deny" this dictates what AWS does based on the action and resource section of the statement. If the action and resource conditions fit then the effect will come into play.

- The action refers to what the statement affects. It can be very specific, the syntax is service colon operation. You can see in the example "s3:*" the service is s3 then there is a colon followed by * meaning all operations.

- Resources refers to specific resources to be affected by the statement.

So you can see in the above statement example, the Effect is "Allow", the action is any action taken on s3 and the resources are all resources (* means all). So if the identity with this policy attached tries to do anything on s3 with any resource it will be Allowed.

It is possible to have contradictory statements within a policy. Understanding how these work is important for AWS security and for the CSA exam. Lets say we have the above example statement allowing full access to S3. In the same policy document we have the following statement:

{

"Sid": "DenyBucket",

"Action": ["s3:*"]

"Effect": "Deny"

"Resource": ["arn:aws:s3:::mypics", "arn:aws:s3:::mypics/*"]

}

Both of these example statements combined are contradictory. The first gives full permissions for s3 to an identity, essentially administrator access. The second denies access to a specific bucket "mypics". So how does AWS reconcile a policy which both gives an identity full access to s3 in one statement and restricts a small portion of s3 in another statement. (Don't worry if you don't yet understand the Resource syntax in the second statement).

AWS reconciles contradictory policies with a few consistent rules:

- Explicit Deny - If there is a statement with the effect "Deny" then that statement always wins. It overrules all others. In the example policy with the two statements above accessing the "mypics" bucket is explicitly denied, that's it nothing overrules so an identity with this policy could not access "mypics".

- Explicit Allow - If there is a statement explicitly allowing something, as in our first statement allowing all access to S3. Then that statement will take effect, UNLESS there is another statement in the policy contradicting the allow with an explicit deny.

- Default Deny - If there is no statement for an action in an identities IAM policy (Deny or Allow) then that action is denied by default. This is an implicit deny AWS assumes to deny permissions as it has not been explicitly told what to do. All identities start off with no access to AWS resources because of this. If explicit access is not given then permissions are denied by default.

This is very important to remember Deny, Allow, Deny (implicit) the order of importance in IAM policy documents.

In the above example the User would be allowed to access all of s3 except any actions involving the specified "mypics" bucket and any objects within it.

As well as contradiction within a single policy it is possible for there to be a contradiction between two separate policy documents. This needs to be reconciled as an identity can have more than one policy document attached. Lets take User1:

- User1 has Policy-A and Policy-B directly attached to their identity

- User1 is also part of an IAM Group for developers as User1 is a developer. This group has it's own policy doc: Policy-C

So User1's actions and permissions are governed by 3 separate policy documents. AWS reconciles any contradictions between these in the same way it would with a single policy document. It gathers all of the policy documents for an identity and looks at them as one entity. It follows the consistent rules of Deny, Allow, Deny (implicit). No policy document takes precedence rather the statements within the policies take precedence based on the Deny, Allow, Deny rules.

If Policy-A explicitly allows something but Policy-C explicitly denies the same thing then the user will be denied permission for that action.

Similarly if none of Policies A, B or C explicitly allow a particular action then that action will be implicitly denied by default.

Two different types of IAM Policy:

Inline - An individual policy document attached to one User / Group. If you wanted to give 3 Users the same permissions using inline it would be 3 separate policy docs. So if you wanted to change anything after you would have to edit all 3 docs.

Managed Policies - A managed policy can be attached (reused for) multiple Users / Groups if you want to edit multiple user permissions then you only need to adjust the managed policy. This is very useful if you have a policy that needs to apply to lots of people as you may need to adjust it at some point and won't want to adjust 50 individual policy docs. These are Reusable and have Low management overhead

So why would you ever use Inline? Generally you only use inline to give a user exceptions to general rules you have in managed policies.

IAM Users and ARNs

IAM Users are an identity used for anything requiring long term AWS access eg. Humans, Applications or service accounts.

ARNs | Amazon Resource Names - These uniquely identify resources within any AWS accounts. These are used in IAM policies to give or deny permissions to resources.

ARN syntax:

arn:partition:service:region:account-id:resource-id

arn:partition:service:region:account-id:resource-type/resource-id

ARNs are collections of fields split by a colon. A double colon means no field specified.

The first field is the partition, this is the partition the resource is in. For standard AWS regions this is AWS, this will normally always just be 'aws'.

The second field is service, this is the service name space to identify a particular AWS service eg. s3, iam, rds.

The third field is region, the region your resource resides in. Some services do not require this for ARNs.

The fourth field is account-id, the account-id of the AWS account that owns the resource. Some resources do not require this to be specified.

The final field is resource-id or resource-type.

Example:

arn:aws:s3:::mypics # This arn references an actual bucket

arn:aws:s3:::mypics/* # This references anything in the bucket but not the bucket itself

The two immediately above ARNs do not overlap. If you want to allow access to create a bucket AND allow access to objects within that bucket then you would need both types in a policy.

In these ARNs you also notice that there are two double colons where region and account-id would normally go. These do not need to be specified with s3 buckets as bucket names are globally unique

Important IAM information for CSA Exam:

- Limit of 5,000 IAM Users per account. - if you have a need for more than 5,000 identities you would use Federation or IAM Roles (more on those later)

- An IAM User can be a member of 10 IAM Groups at maximum.

IAM Groups

IAM Groups are containers for Users. They exist to make managing large numbers of IAM Users easier. You cannot log in to a group they do not have any credentials.

Groups can have policies attached to them. They can either be inline policies or managed policies. There is also nothing to stop individual IAM Users within a group having their own separate policy documents.

There is no limit to how many Users can be in a group. However as there is a limit of 5,000 IAM Users per account the maximum number of Users that can ever be in a group is 5,000.

There is no default All-Users IAM Group, you could create this yourself to hold all of the IAM Users and apply policies to them but it won't be included automatically.

You cannot nest Groups, there are no Groups within Groups.

IAM Roles

IAM Roles are a type of identity which exists within an AWS account. The other type of identity is an IAM User. IAM Groups are not their own identity - they just contain users.

An IAM Role is generally used by an unknown number or multiple principals (people, applications etc.). This might be multiple users inside the same AWS account or humans, applications or services inside / outside the AWS account.

Roles are generally used on a temporary based, something or someone assumes that role for a short time. Roles are not something which represent the user rather they represent a level of access within an AWS account. A Role lets you borrow permissions for a short period of time.

While IAM Users and Groups can have inline and managed policies attached, IAM Roles have two different types of policy that can be attached:

- Trust Policy - Controls which identities can assume a role. If Identity-A is specified as allowed in the Trust Policy attached to a role then Identity-A can assume that role.

- Permissions Policy - Defines what permissions a role has and what resources can be accessed (similar to an IAM User policy document)

When an identity assumes a role AWS generates temporary security credentials for that role. These are time limited. Once they expire the identity will need to renew them by reassuming the role. These security credentials will be able to use what ever services and resources are specified in the permissions policy.

When you assume a Role the temporary security credentials are created by the Secure Token Service (STS) the operation it uses to assume the role is sts:AssumeRole.

Because roles can allow access to external users, they can also be used to allow access between your AWS accounts.

When to use IAM Roles

For AWS Services:

One of the most common uses of Roles within an AWS account are for AWS services themselves. These services operate on your behalf and they need access rights to perform certain functions.

For example you may need to give AWS Lambda permissions to interact with other AWS services in your accounts. To give Lambda these permissions you can create what is called a Lambda Execution Role which has a trust policy which trusts the Lambda Service to assume the role and a permissions policy which grants access to AWS products and services.

If you didn't use a role in this instance then you would need to hard code access keys into each Lambda Function you wanted to use. This is a security risk and it causes problems if you ever need to change or alter the access keys. Additionally you may be using a single Lambda Function or running many of the same function simultaneously. As the number of principals (people, services etc.) is unknown in this case it is best to use a Role.

For Emergency or Unusual Situations:

When someone may need to assume greater permissions than they currently have in exceptional circumstances. All actions they use the role for will be logged and can be reviewed.

When adding AWS to an existing Corporate environment:

If an organisation already has an identity provider like Microsoft AD they may want to offer single-sign-on with these pre-existing identities to include AWS. In that case you can use roles to allow what is an "external user" access to the companies AWS resources.

Another case may be where a company implementing AWS has more than 5,000 identities, you cannot give each one an IAM User as that would be over the limit. In this case you may give these pre-existing identities access to a role so that they can use AWS resources with set permissions.

For architecture for a mobile app:

A mobile application with millions of users needs to store and access data within an AWS database. You can give users of that mobile application access to an IAM Role which gives them the ability to pull data from an AWS database into the app. This is also really useful because as there are no permanent credentials involved with a role there is no chance of AWS credentials being leaked. This allows you to give potentially millions of identities controlled access to AWS resources.

AWS Organisations

A product which allows businesses to manage multiple AWS accounts in a cost-effective way with very little management overhead.

- First you take a single AWS account.

- With it you create an AWS Organisation.

- That account now becomes the Management Account for the organisation. (It used to be called the 'Master Account').

- Using the management account you can invite other existing accounts to the organisation.

- If those account approve the invite they become part of the organisation.

- These account are now known as Member Accounts of that organisation.

- Before joining the organisation the member accounts had their own separate billing methods and bills.

- Organisations consolidate billing of all accounts in the management account (sometimes known as the payer account).

- This means there is now a single monthly bill that covers all of the accounts within the AWS Organisation, this removes a lot of billing admin.

- Additionally some resources get cheaper the more you use and as organisations consolidate billing they are able to benefit more from these volume discounts than any single account would by itself.

You can also create a new account directly within an Organisation.

Organisations change the best practice in terms of user logins and permissions, instead of having IAM Users in every AWS account you have IAM Roles to allow user to access multiple accounts. The architectural pattern is to have a single AWS account contain all of the identities which are logged into, and then use Roles to access other accounts with in the Organisation.

- Organisations have a root container - This is the container for all elements in the organisation.

- Below this there are organisational units OU - These are groupings of accounts, often those that perform similar functions. You might put all of your developments accounts in one OU.

- Organisational Units can be nested so within the Development OU you may have a DevTest OU (containing relevant AWS accounts) and a Staging OU (containing relevant AWS accounts)

- Below that level you have AWS Accounts, these can be outside or inside an OU.

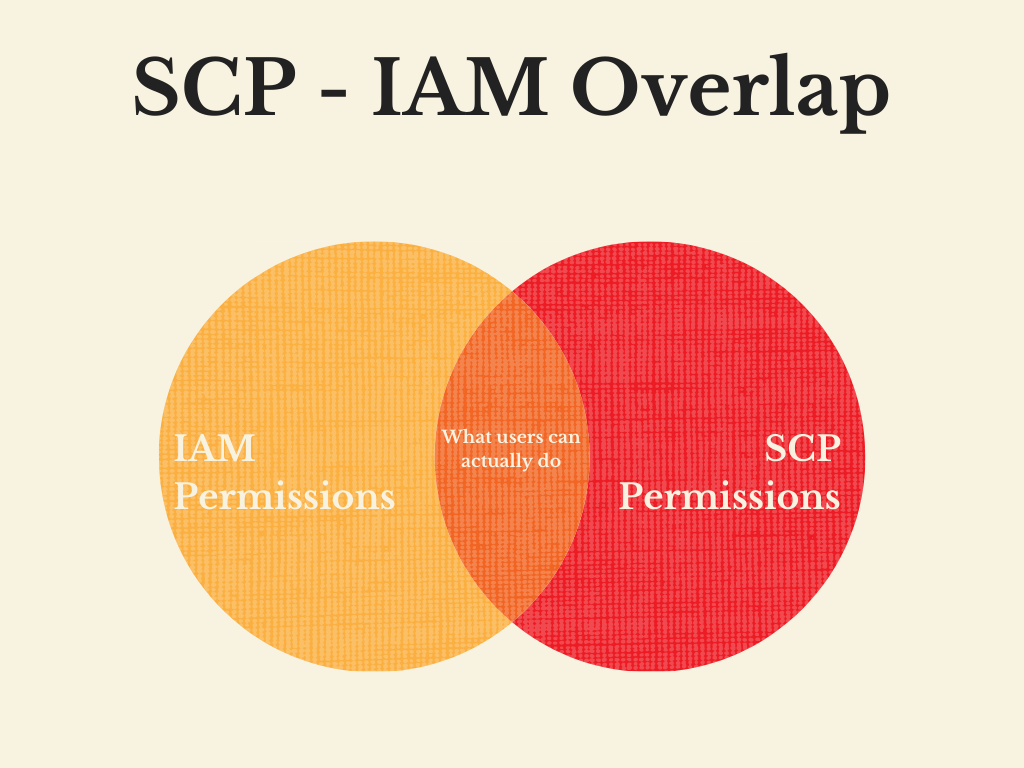

Service Control Policies (SCP)

SCPs are JSON policy documents which can be attached to an organisation as a whole. They can also be attached to one or more organisational units or they can be attached to individual AWS accounts within an organisation.

The Management Account of an Organisation is never affected by service control policies. As the management account cannot be restricted using SCPs it is often a good idea to never use it to interact with AWS resources and instead use other accounts within the organisation which can be permission controlled by an SCP.

SCPs are account permissions boundaries. They limit what accounts (and therefore IAM identities within) are allowed to do, in doing so they can also limit what an account root user can do. This may seem strange as an account root user is not able to be restricted, but you are not directly limiting the root user. Rather think of an SCP as limiting what the account itself can do. The root user of that account still has full permissions to do anything the account can do but if the account can't do something due to an SCP then the root user can't do it either.

Service Control Policies do not grant any permissions - They merely define permission boundaries, they establish which permissions can be granted within an account. you would still need to give identities within accounts permissions to access resources, Any SCPs attached to the account have the ability to limit the permissions that can be given to those identities.

SCPs follow the standard permissions rule of Deny, Allow, Deny (implicit). If something is explicitly denied in a Service Control Policy but is contradicted by an explicit allow in the same SCP or another one then the Deny will always take precedence. If something is not explicitly allowed then it is implicitly denied.

When you first create an SCP Amazon automatically adds a statement to explicitly allow everything - this can be removed or altered.

For example let's say you create an SCP within an account that grants access to only s3, EC2, RDS and IAM. You then create an IAM Identity with an attached policy that allows access to s3, EC2 and Route 53. The SCP defines the permission boundary of the account and supersedes the IAM policy - so the IAM identity would only have access to s3 and EC2 as access to Route 53 is not explicitly allowed by the SCP

CloudWatch Logs

- Public Service - usable from AWS or on-premises (or even other cloud platforms).

- Allows you to store, monitor and access logging data.

- Has built in AWS Integrations including - EC2, VPC, Lambda, CloudTrail, Route 53 etc.

- Can generate metrics based on logs - known as a metric filter - these look for specific elements in logs and picks them out to graph them as data points. Eg. Setting a metric filter to look for errors in your CloudWatch Logs.

- Cloud watch logs is a regional service (not global).

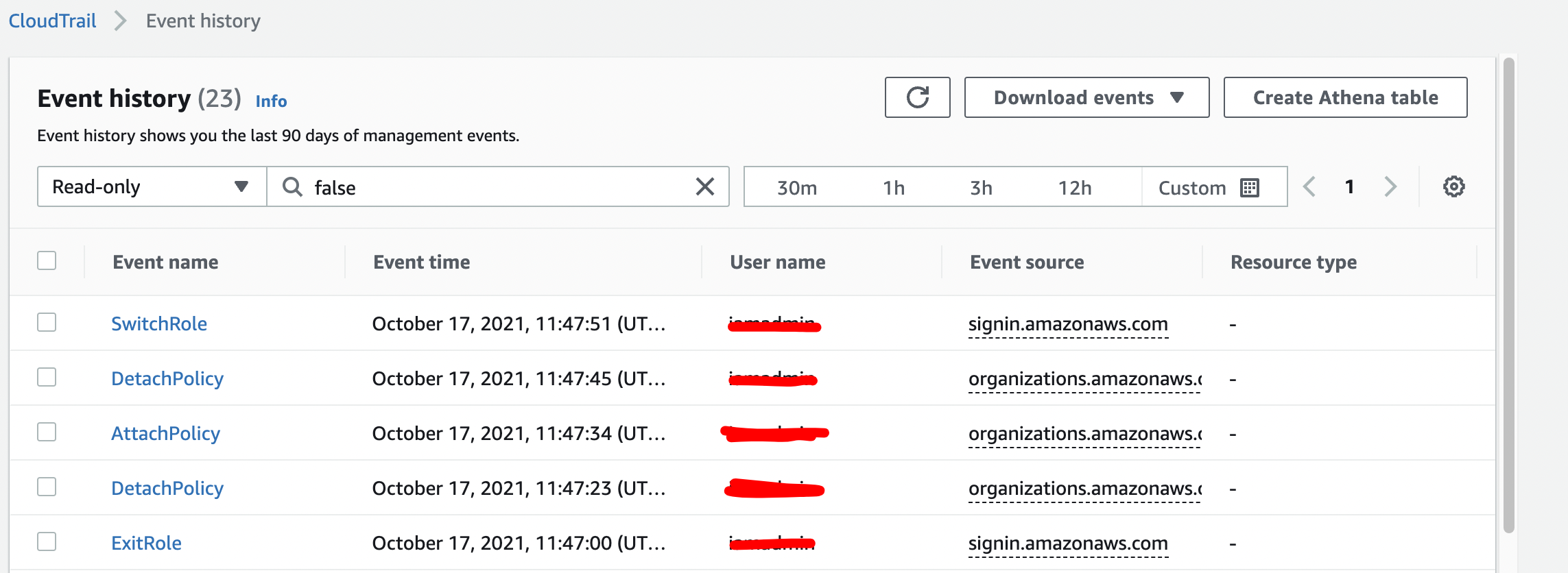

CloudTrail

- CloudTrail is a service that logs API actions which affect AWS accounts. Eg. stopping an instance, deleting an s3 bucket it's all logged by CloudTrail.

- Almost everything that can be done in an AWS account is logged by this service.

- Logs API calls as a CloudTrail Event. Each of these is a record of an activity within an AWS Account.

- Stores 90 days of even history.

- Automatic 90 day store Enabled by default and at no cost.

- You can see all the actions taken in the last 90 days in your CloudTrail Event history.

- To see your CloudTrail Events beyond this 90 day history you need to create a 'Trail' to store the event history.

- CloudTrail events can be one of two types Management Events and Data Events.

- Management events are things like creating an EC2 instance, terminating it, creating a VPC

- Data events contain information about resource operations performed on in or in a resource. Eg. Uploading or accessing objects from s3.

- By default CloudTrail only stores Management events as Data events tend to be much higher volume.

A CloudTrail Trail is how you provide configuration for how CloudTrail should operate beyond default. Trails can be set to single region or all regions. A regional trail would only log regional Events in its own region. This means a regional Trail may not log events in a global service like CloudFront. This is because global services like CloudFront and IAM always log their events to the same one region US-East-1. An all regions Trail encompasses all regions and is automatically updated as regions are added by AWS.

An all regions Trail with global services event logging enabled is listening to all Management events in the account. If you then enable Data event logging for the Trail it will be listening to everything that is happening in the account.

A Trail can store events in a specified s3 bucket, this is how you can keep event history beyond 90 days. When CloudTrail Event logs are stored in s3 they are stored as compressed JSON formatted documents that take up hardly any space.

CloudTrail can also be integrated with CloudWatch logs. CloudWatch logs can then be used to analyse the data with metric filters.

CloudTrail can also have an organisational Trail. This is where one trail logs all of the events in your entire organisation (multiple accounts). Rather than having to set Trails on each account in your org, you can use this.

CloudTrail Need to Knows for the Exam

- Enabled by default but only logs last 90 days

- Trails are how you can configure the data in CloudTrail to be stored in s3 and CloudWatch

- CloudTrail by default only stores Management Events

- Some truly Global Services always log their events to one specific region US-East-1. A regional Trail outside US-East-1 would not capture these events.

- CloudTrail is NOT Real Time - there is a delay (15 mins or so)

S3: Simple Storage Service

S3 Security

S3 is private by default. The only identity that automatically has access to a bucket is the account root user of the account the bucket was created in. Any other permissions have to be explicitly given.

One way to give these permissions is using an S3 Bucket Policy which is a form of resource policy. A resource policy is just like an identity policy but it is attached to a resource instead eg. an s3 bucket.

Differences between identity policies and resource policies:

Where identity policies control what an identity can access, resource policies control who can access the resource. A major difference between the two is that identity policies can only ALLOW or DENY an identity access to resources and actions with in a single account. You have no way to give the identity access to resources in another account. Resource policies can ALLOW or DENY actions and access from the account they are in or completely different accounts. This makes resource policies a great way to control access to particular resource no matter what the source of the access is.

Resource Policies can also ALLOW or DENY Anonymous principals. You CAN'T attach an identity policy to nothing - it always needs an identity to attach to. A resource policy CAN allow complete open access to a resource even if there are no AWS Credentials to assign that ALLOW to. This means a resource policy can allow anonymous non-aws authenticated principals access to a resource.

Resource policies (therefore S3 Bucket Policies) have a Principal which needs to be specified. This principal is who or what the policy is allowing or denying access to. When writing an identity policy you do not need to specify a principal as it is assumed the identity to which the policy is attached is the principal. This means you can identify a resource policy or an identity policy by whether or not it has a principal specified.

{

"Version": "2012-10-17"

"Statement": [

{

"Sid":"PublicRead",

"Effect": "Allow",

"Principal": "*",

"Action": ["s3:GetObject"],

"Resource": ["example:arn:s3::addweflkwf/*]

}

]

}

Above is an example of a resource policy - you can see that there is a principal specified "*" meaning all principals. So any principal can perform the action "s3:GetObject" on the resource specified by this policy.

You can use s3 bucket policies to provide very granular access to s3 based on a variety of factors. You block access to a particular folder, based on identity, IP address, whether your identity uses MFA and more. These policies can be very simple or they can get quite complex.

There can only be one bucket policy attached to a bucket. If an identity accessing a bucket also has an identity policy attached then the access rights to the bucket will be the cumulative effect of the identity policy and the resource policy based upon Explicit DENY, Explicit ALLOW, Default DENY - as discussed earlier.

S3 buckets also have a Block Public Access setting - these settings are configured outside of s3 bucket policies and only apply to anonymous principals. They apply no matter what the bucket policy says. This way if you accidentally configure your bucket policy to allow public access and didn't mean to but you have correctly configured your Block Public Access setting then your mistake won't lead to data leaks.

s3 Static Website Hosting

When we use S3 on the console we are actually accessing it via API. The console is just a visual wrapper for the underlying API. When we access an object in the s3 console we are really using the underlying GetObject API command.

We can use s3 to host static websites which instead of accessing s3 via API allows us to access it via HTTP. When you enable this feature on a bucket AWS creates a Website Endpoint which the bucket can be accessed at via HTTP.

This feature allows you to offload all of the media on a website to s3 - s3 is a far cheaper form of storage than keeping it on a compute service like EC2.

Pricing

- S3 charges a per GB month storage fee. So every Gigabyte you have stored each month incurs a charge, if you pull the data before the month is up then you only pay for the portion of the month you used.

- There are also data transfer charges. You are charged to transfer data OUT of s3 (per GB) but you are never charged to transfer it INTO s3.

- You are also charged a certain amount to request data. If you are hosting popular static website using s3 then you may use a lot of requests .

S3 Object Versioning and MFA Delete

These are important to know for the solutions architect exam.

Object versioning - is configured at the bucket level. It is disabled by default, once enabled you cannot disable it again. You can suspend versioning on a bucket and then enable it again but you can't disable it. Suspending versioning just stops new versions being created but it does not delete existing versions. Versioning lets you store multiple versions of an object within a bucket. Any operations / actions which result in a change to an object generate a new version of the object and leave the old one in place. Without Versioning enabled the old object is just replaced by the new one.

Objects have both a KEY (its name eg cat-pic.jpg) and an ID which when Versioning is disabled is set to null. If you have versioning enabled and you have cat-pic.jpg in you bucket, it will be given an ID number. If you now uploaded another cat-pic.jpg rather than deleting the existing cat-pic.jpg and replacing it with this fresh upload, the new upload will be given a different ID and the original cat-pic.jpg will remain.

The newest version of an object in a versioning enabled bucket is known as the current version. If an object is accessed / requested without explicitly stating the ID of the object then it is always the current version that will be returned. However you have the option of specifying the ID to access a particular version of an object.

Versioning also impacts deletions - if you delete a versioned object it is not actually deleted. Instead AWS creates a new special version of the object called a delete marker essentially this hides all previous versions of the object and in effect deleting it. You can however delete the delete marker which then effectively undeletes all the previous versions of the object.

It is possible to actually delete an object completely. All you have to do is specify the ID of the object you want to delete. If the ID is for the current version then the previous version will be promoted to current version.

MFA Delete - A configuration setting for object versioning that when enabled causes MFA to be required to change any versioning state. Eg when making a bucket go from disabled -> enabled or enabled -> suspended for versioning.

- Can also require that MFA is used to delete any versions from a bucket.

S3 Performance Optimisation

Single Put vs Multipart Upload

- By default when you upload to S3 it is uploaded in a single blob, as a single stream. This is a Single Put Upload.

- This means if a stream fails the whole upload fails. If you need to upload from somewhere with unreliable internet this is a negative.

- A Single Put Upload is limited to 5GB of data.

The solution to this is using multipart upload. It does this by breaking data up into individual parts. The minimum amount of data to use multipart upload is 100mb. An upload can be split into a maximum of 10,000 parts ranging between 5MB and 5GB. The last part of data (leftover) can be smaller than 5MB if needed.

- Each individual part is treated as its own isolated upload which can fail and be restarted as an individual up load. This means if you were uploading 4.5GB of Data and one part fails the whole upload doesn't fail, you can just restart that one failed part saving time and money.

- Additionally this multipart upload massively improves Transfer Rate as the upload speed is sum of the speed of all the different parts being uploaded. This is much better than Single Put Upload where you are limited to the speed of the single stream.

S3 Transfer Acceleration

Without Transfer Acceleration if you were sending data from Australia to a bucket in the UK the data will travel over the public internet taking a route determined by ISPs - not necessarily the quickest route and often slowing down in areas as it hops across the globe to its final destination in the UK. This is not optimal.